You are running an A/B test (or multivariate test) but are in a hurry to make a decision and pick the winning page. Should you pick a winner based on the first few days of data, before your tool has actually declared a clear winner? This question comes up quite often in conversations with clients.

I always warn against such an approach and advise being patient and getting statistically significant results before pulling the plug on an underperforming variation or declaring a winner. I wanted to share a few graphs with you to illustrate my point.

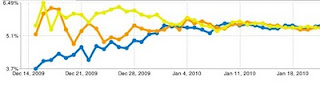

The following example is from a test that I am currently running.

First Two Weeks: If you make a decision without running the test to completion, you will pick “Yellow” as the winner and declare “Blue” a loser.

A Month Later: There does not seem to be a clear winner.

A Few Days Later: “Blue” seems to be trending higher. Should you pick “Blue” now?

Finally: We don’t have a clear winner yet.

As you can see, picking a winner or dropping a loser in the early stages of the test, without a statistically significant result, would have been the wrong decision.
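To make "statistically significant" concrete, the check can be sketched as a standard two-sided, two-proportion z-test between two variations. This is a minimal sketch assuming each visit is an independent convert/no-convert trial and only two variations are compared; it is not necessarily the exact calculation GWO or any other tool performs. The function name and the conversion counts below are hypothetical, not data from the test in the graphs.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a, conv_b: conversions in each variation (hypothetical inputs).
    n_a, n_b: visitors in each variation.
    Returns (z, p_value) under the null hypothesis that both rates are equal.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the best estimate of the common rate if the null is true.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion")
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Same 6% vs 4% rates, early (500 visitors each) and later (5000 each).
    for n in (500, 5000):
        z, p = two_proportion_z_test(int(0.06 * n), n, int(0.04 * n), n)
        print(f"n={n}: z={z:.2f}, p={p:.4f}")
```

With 500 visitors per variation, a 6% vs 4% gap gives a p-value well above 0.05, so the apparent leader could easily be noise. The same gap with ten times the traffic is clearly significant. This is why an early lead in the chart is not enough to act on.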

Comments? Questions?

--------------------------------------------------------------------------

Between two sets in an A/B split test, if the population of each set is sufficiently large and the difference in response rates is statistically significant, how would you know that the results may still change in the future, and that one should not make a call based on a single test?

How many days should you run the test to be sure that the results are statistically significant and will not change in the future?
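The number of days depends on traffic, the baseline conversion rate, and the smallest lift you care about detecting, rather than on a fixed calendar period. A rough sketch, assuming the common normal-approximation sample-size formula for a two-sided two-proportion z-test and traffic split evenly across variations; the function names, rates, and traffic figures are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Visitors needed in EACH variation to detect baseline_rate -> expected_rate
    with a two-sided two-proportion z-test at level alpha and the given power."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to size a test")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.8
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (baseline_rate - expected_rate) ** 2)


def days_to_run(baseline_rate, expected_rate, variations, daily_visitors, **kwargs):
    """Days needed if daily_visitors are split evenly across all variations."""
    n = sample_size_per_variation(baseline_rate, expected_rate, **kwargs)
    return math.ceil(n * variations / daily_visitors)


if __name__ == "__main__":
    # Hypothetical: 5% baseline, hoping to detect a lift to 6%,
    # four variations sharing 1,000 visitors per day.
    print(sample_size_per_variation(0.05, 0.06))
    print(days_to_run(0.05, 0.06, variations=4, daily_visitors=1000))
```

In this made-up scenario, each variation needs roughly 8,000 visitors and the test needs about a month. Smaller expected lifts or lower traffic push that out quickly, because the required sample grows with the inverse square of the difference.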

This has to be the best article explaining statistical significance for A/B testing.

One of those cases when an image is worth 1000 calculations :)

Thank you.

Not only do you not have a winner, you might incorrectly pick "Blue" as the loser. One possible scenario is that Blue is a new treatment that users need to get used to. Alternatively, some new treatments are "exciting" or novel at first, but that effect doesn't last.

I completely agree with your conclusions here.

Our paper (http://exp-platform.com/hippo_long.aspx) talks in depth about novelty and primacy effects.

While I agree with your conclusion, and prefer to have statistically significant results, here is my question: would we have made a huge error by selecting Yellow early (if we had no time to test any longer), given that it seems to perform as well as any of the other options?

Excellent post, Anil. It's critically important that we understand significance before making quick decisions on very early results. I wrote about this a bit in a post called "Wanna be better with metrics? Watch more poker and less baseball" (http://www.retailshakennotstirred.com/retail-shaken-not-stirred/2009/11/wanna-better-understand-metrics-watch-more-poker-and-less-baseball.html), and in that post I included a free spreadsheet that can be used to determine statistical significance. I thought your readers might find it useful.

Thanks for an excellent post!

This can also happen if your client has trend shifts in their traffic stream. Of course, you have to draw the line at some point, or you'll be in perpetual testing mode.

Having said that, there are SOME times when the test page completely tanks or smokes the original page, but in most instances I agree it's better to wait it out.

I like the post. Short and sweet.

Anil, nice post! But some would argue that picking Yellow early wouldn't have had a big negative impact (based on the graphs), and that you would indeed be applying testing correctly: test, fail or succeed quickly, and then retest. It would be interesting to see an analysis of when testers must make a decision and when it pays to wait.

Nice post. Rather than just letting your A/B tool pick a winner for you, I think it's even more important to know the statistics behind the calculations, so that your results are tool-independent.

Great post!

The variations are trending so closely that it makes me think you need to go back to the drawing board and start over!

http://bit.ly/a2uVxU

Suarbah, once you reach statistical significance, the results should not change, assuming all other variables don't change.

This example shows that results can change early on, and that you should not make decisions in a hurry without getting statistically significant results.

Google has a calculator that might help: https://www.google.com/analytics/siteopt/siteopt/help/calculator.html

Barbara,

Since the test has not completed yet, I cannot say whether picking Yellow would be a mistake. The point I am trying to make is that you have to wait for statistically significant results before declaring a winner (or loser), because results can change (as the chart shows).

Giadascript, the test is still running. All the treatments are getting equal traffic.

Suresh,

I always advise waiting, but there are cases when a test runs too long without giving any conclusive results; in that case, you need to test a different variation.

In this case, it seems that picking Yellow might not have been the wrong decision, but it would not have been the right decision either. (Also see my answers above.)

Jason, I agree with you.

I think a problem with the null hypothesis formalism is that it can, ironically, paralyze decision making. You can get stuck worrying that you are picking the 'wrong' answer. It might be better to think about the decision problem in terms of maximizing revenue (or minimizing regret) rather than in terms of winners and losers, or right and wrong answers.

A few things to think about before going the null hypothesis route:

1) Opportunity costs: it costs you to learn, so you want to do it efficiently. Significance testing as discussed above does not account for the reward lost by playing suboptimal options.

2) The internet is real-time! There is often no need to pick an arbitrary point in time (picking a confidence level is arbitrary) and force yourself to play a pure strategy from then on. Think in continuous rather than discrete terms. There is no reason you can't keep all options available while decreasing the frequency with which they are played as you learn more about them over time. In other words, play a mixed (portfolio) strategy. Which brings me to the last point:

3) The environment need not be stationary. More likely than not, the environment in which your application makes its decisions is non-stationary, so the notion of a 'winner' may not make any sense.

Often, these types of decision problems are better modeled as bandit problems than as hypothesis-testing problems.

Of course, it depends on what you want to learn and why. If you need to make generalizations about the world, then you probably want some confidence around your statements and should run more formal tests. But if you just want to optimize an online process, then I'm not sure that significance testing is always the way to go. I can guarantee that Google, Yahoo!, etc. are not running null hypothesis tests on ad placements; they are running bandits.

Thanks
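The "mixed strategy" in the comment above, keeping every option live but shifting traffic toward the better performers as evidence accumulates, is what multi-armed bandit algorithms do. The comment doesn't name a specific algorithm; the sketch below uses Beta-Bernoulli Thompson sampling, one common choice, on simulated traffic. The function name and conversion rates are hypothetical, and the sketch assumes the rates stay fixed over time, so it does not handle the non-stationary case in point 3.

```python
import random


def thompson_sampling(true_rates, visitors, seed=0):
    """Simulate Beta-Bernoulli Thompson sampling over several page variations.

    true_rates: hypothetical conversion rate of each variation, unknown to
    the algorithm and used only to simulate visitor behavior.
    Returns (visitors shown each variation, total conversions).
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k  # conversions observed per variation
    failures = [0] * k   # non-conversions observed per variation
    for _ in range(visitors):
        # Sample a plausible rate for each variation from its Beta(1+s, 1+f)
        # posterior (uniform prior), then show the variation with the top draw.
        draws = [rng.betavariate(1 + successes[i], 1 + failures[i]) for i in range(k)]
        arm = max(range(k), key=lambda i: draws[i])
        # Simulate whether this visitor converts on the chosen variation.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    plays = [successes[i] + failures[i] for i in range(k)]
    return plays, sum(successes)


if __name__ == "__main__":
    plays, conversions = thompson_sampling([0.04, 0.05, 0.06], 20000, seed=1)
    print("visitors per variation:", plays)
    print("total conversions:", conversions)
```

Unlike a fixed even split, weaker variations keep getting a trickle of traffic rather than being dropped at a single decision point. The opportunity cost from point 1 shrinks as the algorithm grows more confident about which variation converts best.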

Thanks for the post. Is this a Google Website Optimizer test? We have run several tests with this tool and got the same charts every time: during the first two weeks of testing there's a significant difference between combinations, and two weeks later the chart shows no difference between them at all.

Hi Olga,

Yes, this is from GWO. Would you be able to send me a copy of your chart?